Edge Computing Platforms: Insights from Gartner’s 2024 Market Guide

Edge computing allows organizations to process data close to where it’s generated, such as in retail stores, industrial sites, and smart cities, with the goal of improving operational efficiency and reducing latency. However, edge computing requires a platform that can support the necessary software, management, and networking infrastructure. Let’s explore the 2024 Gartner Market Guide for Edge Computing Platforms, which highlights the drivers of edge computing and offers guidance for organizations considering edge strategies.

What is an Edge Computing Platform (ECP)?

Edge computing moves data processing close to where it’s generated. For bank branches, manufacturing plants, hospitals, and others, edge computing delivers benefits like reduced latency, faster response times, and lower bandwidth costs. An Edge Computing Platform (ECP) provides the foundation of infrastructure, management, and cloud integration that enables edge computing. The goal of having an ECP is to allow many edge locations to be efficiently operated and scaled with minimal, if any, human touch or physical infrastructure changes.

Before we describe ECPs in detail, it’s important to first understand why edge computing is becoming increasingly critical to IT and what challenges arise as a result.

What’s Driving Edge Computing, and What Are the Challenges?

Here are the five drivers of edge computing described in Gartner’s report, along with the challenges that arise from each:

1. Edge Diversity

Every industry has its unique edge computing requirements. For example, manufacturing often needs low-latency processing to ensure real-time control over production, while retail might focus on real-time data insights to deliver hyper-personalized customer experiences.

Challenge: Edge computing solutions are usually deployed to address an immediate need, without taking into account the potential for future changes. This makes it difficult to adapt to diverse and evolving use cases.

2. Ongoing Digital Transformation

Gartner predicts that by 2029, 30% of enterprises will rely on edge computing. Digital transformation is catalyzing its adoption, while use cases will continue to evolve based on emerging technologies and business strategies.

Challenge: This rapid transformation means environments will continue to become more complex as edge computing evolves. This complexity makes it difficult to integrate, manage, and secure the various solutions required for edge computing.

3. Data Growth

The amount of data generated at the edge is increasing exponentially due to digitalization. Initially, this data was often underutilized (referred to as the “dark edge”), but businesses are now shifting towards a more connected and intelligent edge, where data is processed and acted upon in real time.

Challenge: Enormous volumes of data make it difficult to efficiently manage data flows and support real-time processing without overwhelming the network or infrastructure.

4. Business-Led Requirements

Automation, predictive maintenance, and hyper-personalized experiences are key business drivers pushing the adoption of edge solutions across industries.

Challenge: Meeting these business requirements is difficult because edge solutions must remain scalable, interoperable, and adaptable as the requirements change.

5. Technology Focus

Emerging technologies such as AI/ML are increasingly deployed at the edge for low-latency processing, which is particularly useful in manufacturing, defense, and other sectors that require real-time analytics and autonomous systems.

Challenge: AI and ML workloads make it difficult for organizations to balance computing power against infrastructure costs without sacrificing security.

What Features Do Edge Computing Platforms Need to Have?

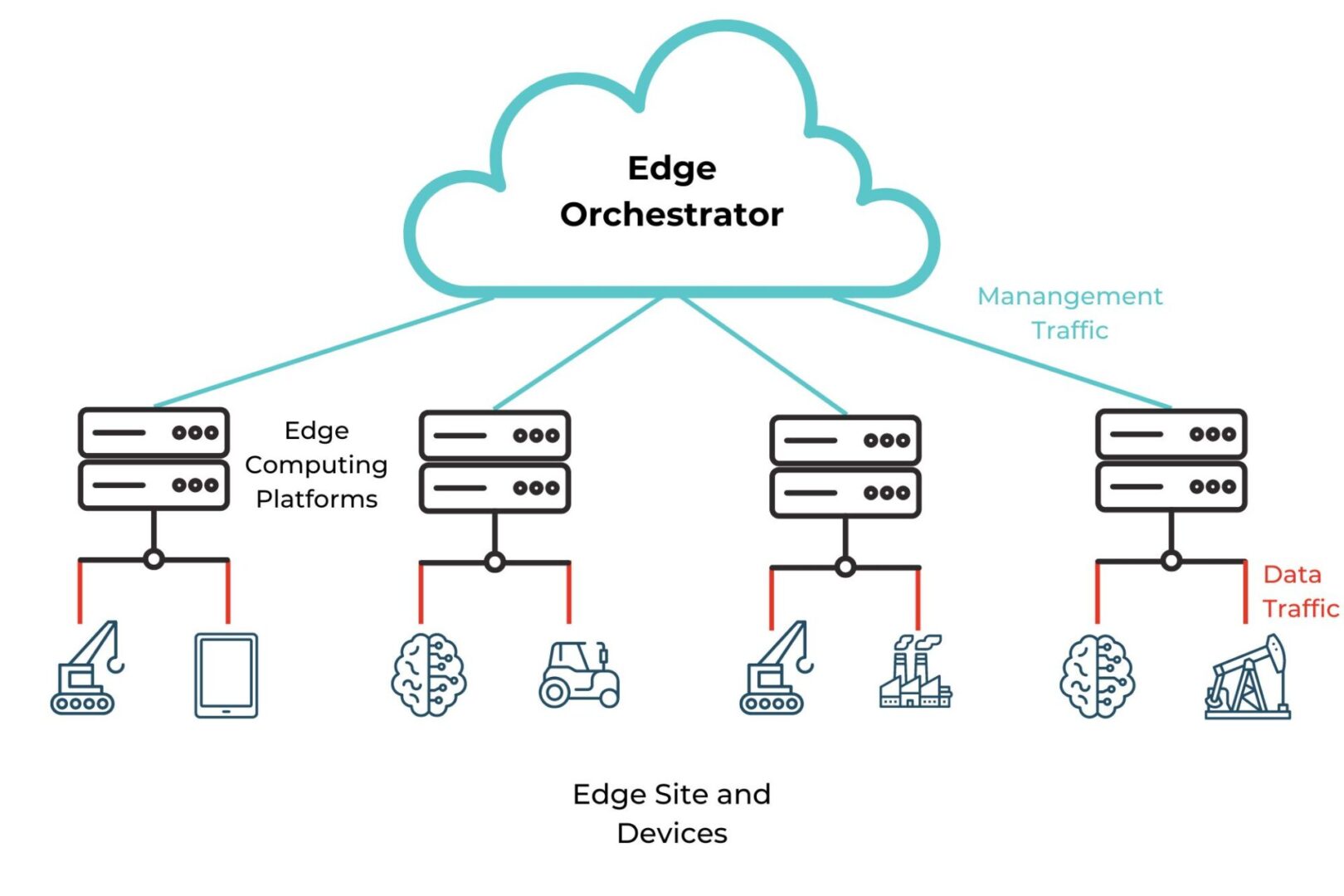

To address these challenges, here’s a brief look at three core features that ECPs need to have according to Gartner’s Market Guide:

- Edge Software Infrastructure: Support for edge-native workloads and infrastructure, including containers and VMs. The platform must be secure by design.

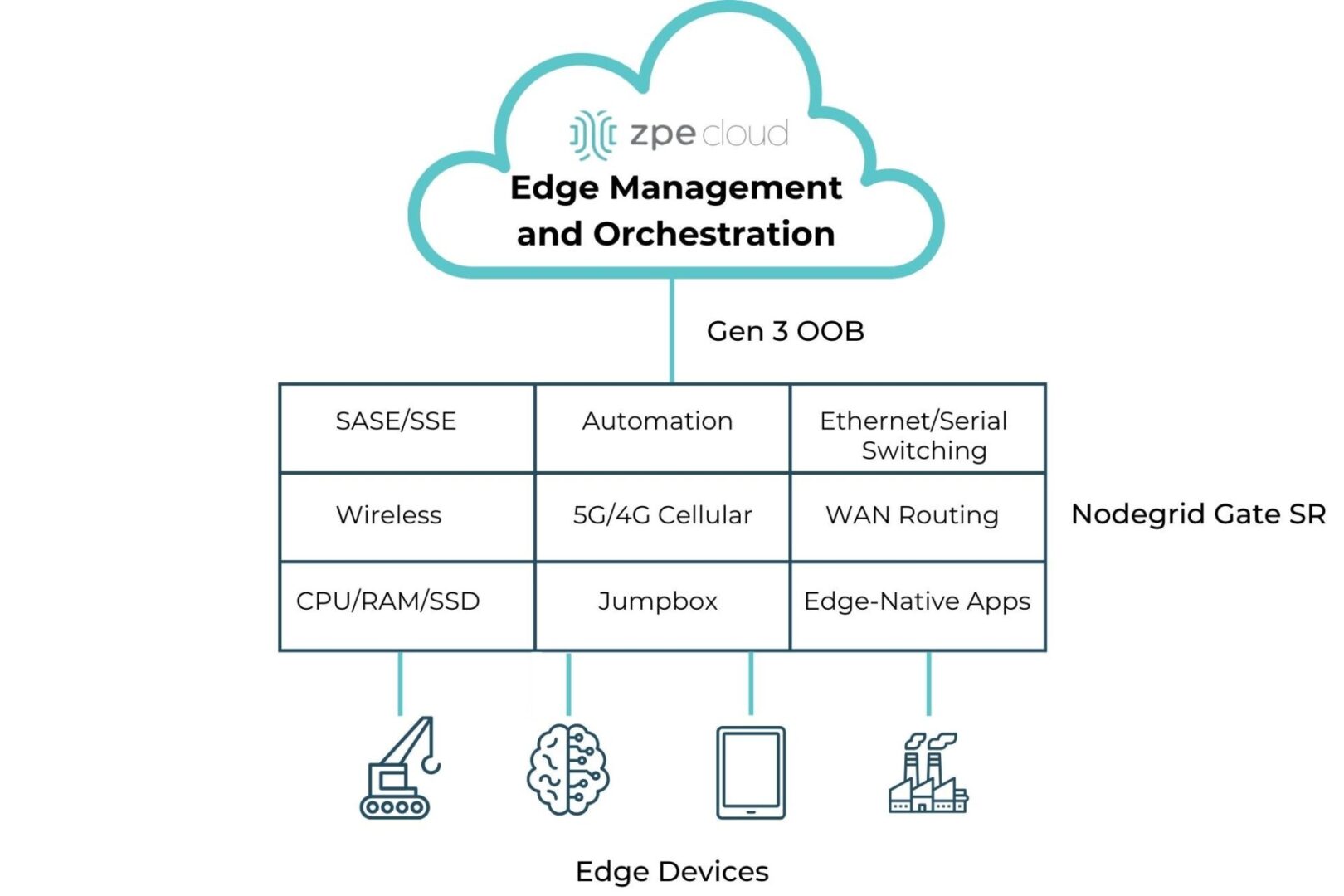

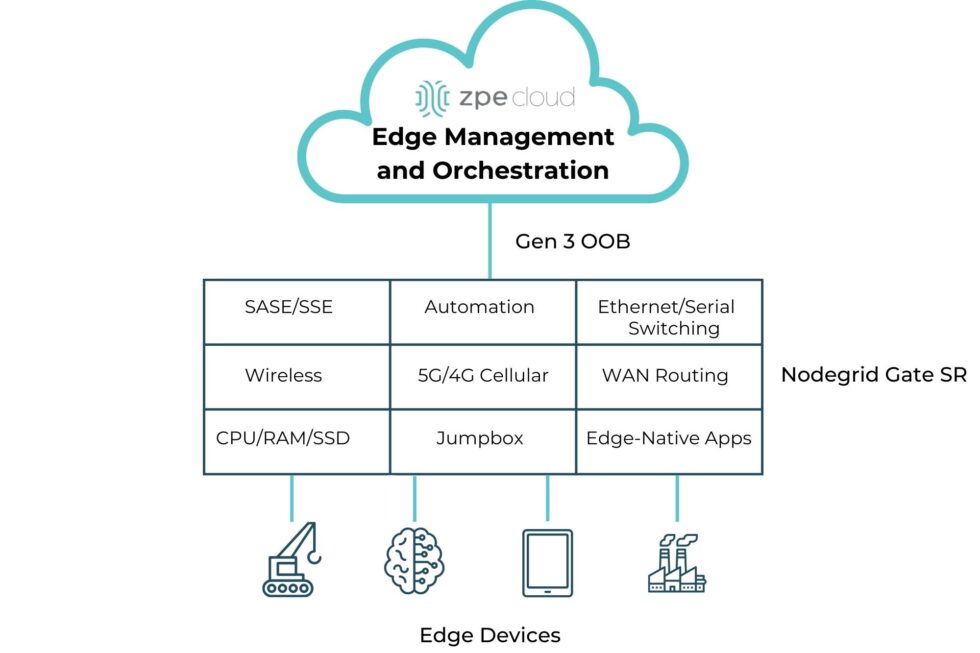

- Edge Management and Orchestration: Centralized management for the full software stack, including orchestration for app onboarding, fleet deployments, data storage, and regular updates/rollbacks.

- Cloud Integration and Networking: Seamless connection between edge and cloud to ensure smooth data flow and scalability, with support for upstream and downstream networking.

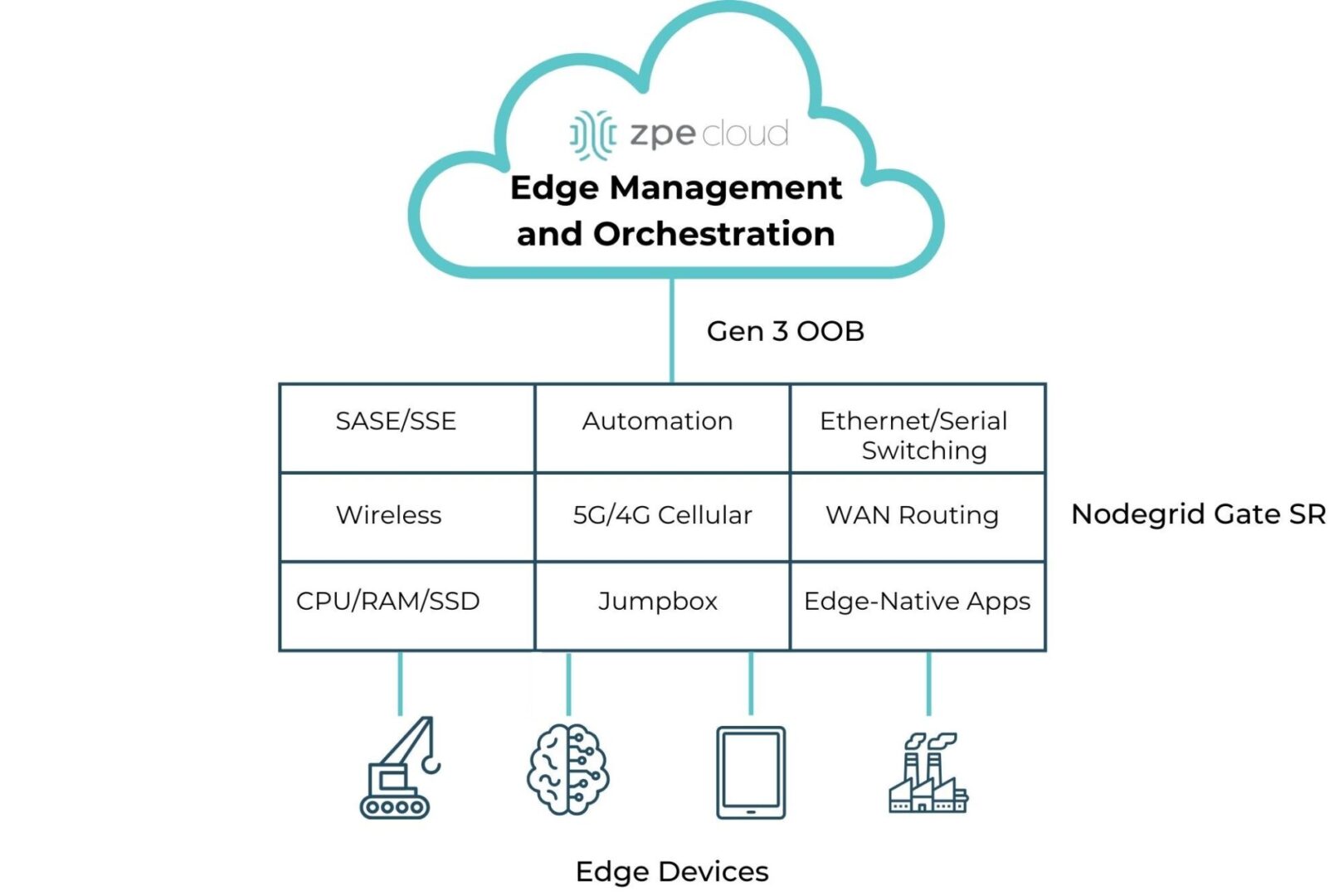

Image: A simple diagram showing the computing and networking capabilities that can be delivered via Edge Management and Orchestration.
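To make the "fleet deployments" and "updates/rollbacks" capability more concrete, here is a minimal Python sketch of a staged rollout. It is an illustration only and does not reflect any vendor's API: `EdgeSite`, `staged_rollout`, the site names, and the `healthy_after_update` flag (a stand-in for a real post-update health check) are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class EdgeSite:
    """Hypothetical edge location tracked by a central orchestrator."""
    name: str
    version: str
    healthy_after_update: bool = True  # assumption: stand-in for a real health check
    history: list = field(default_factory=list)

    def update(self, new_version: str) -> bool:
        """Apply the new version and report whether the site is healthy."""
        self.history.append(self.version)
        self.version = new_version
        return self.healthy_after_update

    def rollback(self) -> None:
        """Restore the version that was running before the last update."""
        if self.history:
            self.version = self.history.pop()


def staged_rollout(sites, new_version, batch_size=2):
    """Update sites in batches; if any site in a batch is unhealthy,
    roll back every site updated so far and stop.

    Returns True if the whole fleet ends on new_version, otherwise False.
    """
    updated = []
    for start in range(0, len(sites), batch_size):
        batch = sites[start:start + batch_size]
        results = []
        for site in batch:
            results.append(site.update(new_version))
            updated.append(site)
        if not all(results):
            for site in reversed(updated):
                site.rollback()
            return False
    return True


if __name__ == "__main__":
    fleet = [
        EdgeSite("store-001", "1.0"),
        EdgeSite("store-002", "1.0"),
        EdgeSite("plant-001", "1.0", healthy_after_update=False),
        EdgeSite("plant-002", "1.0"),
    ]
    succeeded = staged_rollout(fleet, "1.1")
    print(succeeded, [site.version for site in fleet])
```

Updating in small batches limits how many locations a bad release can reach, and keeping a version history per site is what makes the rollback possible without anyone visiting the location.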

How ZPE Systems’ Nodegrid Platform Addresses Edge Computing Challenges

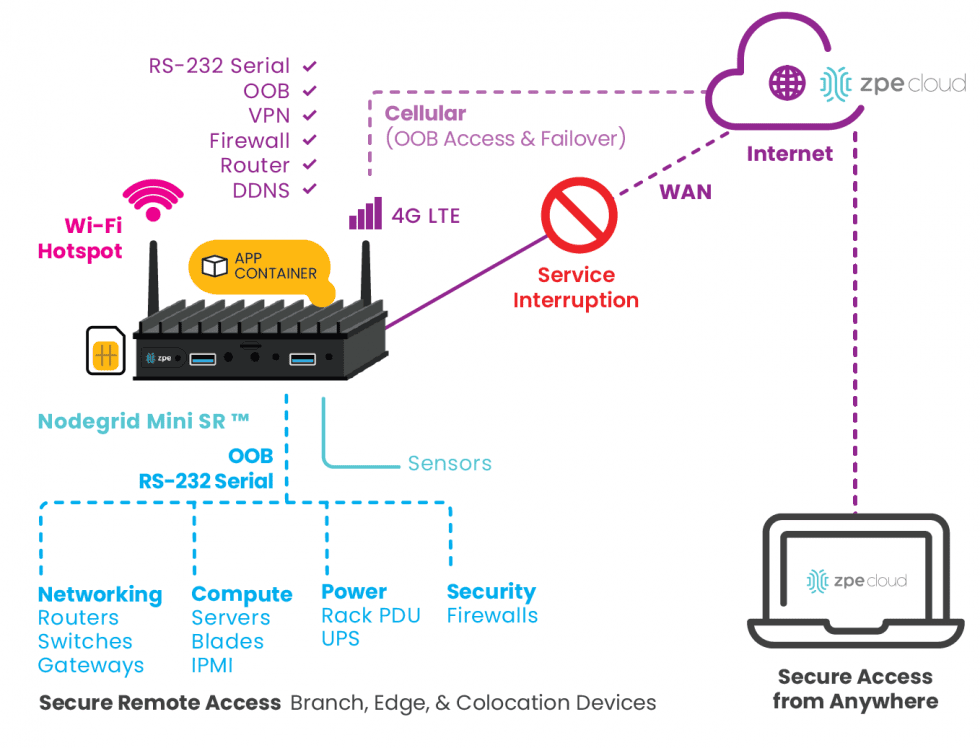

ZPE Systems’ Nodegrid is a Secure Service Delivery Platform that meets these needs. Nodegrid covers all three feature categories outlined in Gartner’s report, allowing organizations to host and manage edge computing via one platform. Not only is Nodegrid the industry’s most secure management infrastructure, but it also features a vendor-neutral OS, hypervisor, and multi-core Intel CPU to support necessary containers, VMs, and workloads at the edge. Nodegrid follows isolated management best practices that enable end-to-end orchestration and safe updates/rollbacks of global device fleets. Nodegrid integrates with all major cloud providers and features a variety of uplink types, including 5G, Starlink, and fiber, to address use cases ranging from setting up out-of-band access to architecting Passive Optical Networking.

Here’s how Nodegrid addresses the five edge computing challenges:

1. Edge Diversity: Adapting to Industry-Specific Needs

Nodegrid is built to handle diverse requirements, with a flexible architecture that supports containerized applications and virtual machines. This architecture enables organizations to tailor the platform to their edge computing needs, whether for handling automated workflows in a factory or data-driven customer experiences in retail.

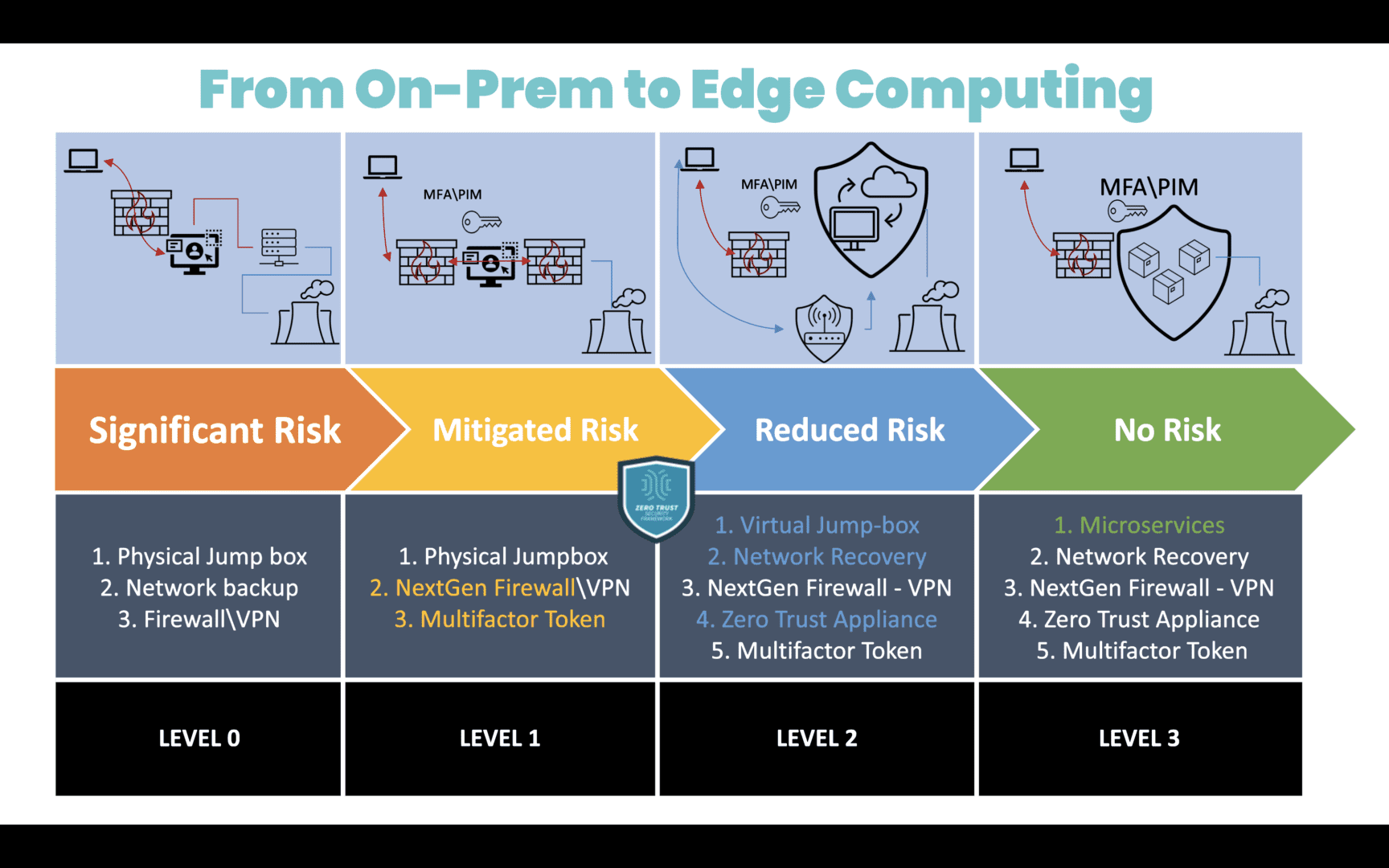

2. Ongoing Digital Transformation: Supporting Continuous Growth

Nodegrid supports ongoing digital transformation by providing zero-touch orchestration and management, allowing for remote deployment and centralized control of edge devices. This enables teams to perform initial setup of all infrastructure and services required for their edge computing use cases. Nodegrid’s remote access and automation provide a secure platform for keeping infrastructure up to date and optimized without the need for on-site staff. This helps organizations shift much of their focus away from operations (“keeping the lights on”) and gives them the agility to scale their edge infrastructure to meet their business goals.

3. Data Growth: Enabling Real-Time Data Processing

Nodegrid addresses the challenge of exponential data growth by providing local processing capabilities, enabling edge devices to analyze and act on data without relying on the cloud. This not only reduces latency but also enhances decision-making in time-sensitive environments. For instance, Nodegrid can handle the high volumes of data generated by sensors and machines in a manufacturing plant, providing instant feedback for closed-loop automation and improving operational efficiency.
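The idea of analyzing and acting on data locally, rather than shipping every reading to the cloud, can be sketched in a few lines of Python. This is a generic illustration and not Nodegrid code: the function name `summarize_window`, the sample temperature values, and the alert threshold are assumptions made for the example.

```python
from statistics import mean


def summarize_window(readings, threshold):
    """Reduce a window of raw sensor readings to one compact summary.

    Only the summary would be sent upstream; readings above the
    (hypothetical) threshold are returned so they can be acted on locally.
    """
    if not readings:
        raise ValueError("readings must not be empty")
    return {
        "count": len(readings),
        "mean": mean(readings),
        "max": max(readings),
        "alerts": [value for value in readings if value > threshold],
    }


if __name__ == "__main__":
    # Assumed sample data: five temperature readings from one machine.
    window = [70.1, 70.4, 69.8, 95.2, 70.0]
    summary = summarize_window(window, threshold=90.0)
    if summary["alerts"]:
        # A local control loop could react here without a cloud round trip.
        print("local action needed for:", summary["alerts"])
    print("summary sent upstream:", summary)
```

In this sketch, five raw readings collapse into a single record, which is the kind of reduction that keeps upstream bandwidth manageable, while the out-of-range value is available immediately for a local response.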

4. Business-Led Requirements: Tailored Solutions for Industry Demands

Nodegrid’s hardware and software are designed to be adaptable, allowing businesses to scale across different industries and use cases. In manufacturing, Nodegrid supports automated workflows and predictive maintenance, ensuring equipment operates efficiently. In retail, it powers hyper-personalization, enabling businesses to offer tailored customer experiences through edge-driven insights. The vendor-neutral Nodegrid OS integrates with existing and new infrastructure, and the Net SR is a modular appliance that allows for hot-swapping of serial, Ethernet, computing, storage, and other capabilities. Organizations using Nodegrid can adapt to evolving use cases without having to do any heavy lifting of their infrastructure.

5. Technology Focus: Supporting Advanced AI/ML Applications

Emerging technologies such as AI/ML require robust edge platforms that can handle complex workloads with low-latency processing. Nodegrid excels in environments where real-time analytics and autonomous systems are crucial, offering high-performance infrastructure designed to support these advanced use cases. Whether processing data for AI-driven decision-making in defense or enabling real-time analytics in industrial environments, Nodegrid provides the computing power and scalability needed for AI/ML models to operate efficiently at the edge.

Read Gartner’s Market Guide for Edge Computing Platforms

As businesses continue to deploy edge computing solutions to manage increasing data, reduce latency, and drive innovation, selecting the right platform becomes critical. The 2024 Gartner Market Guide for Edge Computing Platforms provides valuable insights into the trends and challenges of edge deployments, emphasizing the need for scalability, zero-touch management, and support for evolving workloads.

Click below to download the report.

Get a Demo of Nodegrid’s Secure Service Delivery

Our engineers are ready to walk you through the software infrastructure, edge management and orchestration, and cloud integration capabilities of Nodegrid. Use the form to set up a call and get a hands-on demo of this Secure Service Delivery Platform.

Nodegrid’s OOB also ensures remote teams have 24/7 access to manage, troubleshoot, and recover edge deployments even during a major network outage or ransomware infection. Plus, Nodegrid’s ability to host Guest OS, including Docker containers and VNFs, allows companies to consolidate an entire edge networking stack in a single platform. Nodegrid devices like the
