Applications of Edge Computing

The edge computing market is large and still growing: one recent study projected that spending on edge computing will reach $232 billion in 2024. Organizations in nearly every industry use edge computing’s real-time data processing to gain immediate business insights, respond to issues at remote sites before they affect operations, and more. This blog discusses applications of edge computing in industries such as finance, retail, manufacturing, and healthcare, and offers advice on how to get started.

What is edge computing?

Edge computing decentralizes computing resources by moving them to the edges of the network, close to where data is generated. Doing so reduces the number of network hops between data sources and the applications that process and use that data, which helps mitigate the latency, bandwidth, and security concerns associated with cloud or on-premises computing.


Edge computing often relies on edge-native applications, which are built from the ground up to harness edge computing’s unique capabilities and work within its limitations. Edge-native applications borrow some cloud-native principles, such as containers, microservices, and CI/CD. Unlike cloud-native apps, however, they’re designed to process transient data in real time with limited computational resources. Edge-native applications integrate with the cloud, upstream resources, remote management, and centralized orchestration, but can also operate independently when needed.


Applications of edge computing

Financial services

The financial services industry collects a lot of edge data from bank branches, web and mobile apps, self-service ATMs, and surveillance systems. Many firms feed this data into AI/ML-powered data analytics software to gain insights into how to improve their services and generate more revenue. Some also use AI-powered video surveillance systems to analyze video feeds and detect suspicious activity. However, there are enormous security, regulatory, and reputational risks involved in transmitting this sensitive data to the cloud or an off-site data center.

Financial institutions can use edge computing to move data analytics applications to branches and remote PoPs (points of presence) to help mitigate the risks of transmitting data off-site. Additionally, edge computing enables real-time data analysis for more immediate and targeted insights into customer behavior, branch productivity, and security. For example, AI surveillance software deployed at the edge can analyze live video feeds and alert on-site security personnel about potential crimes in progress.

Industrial manufacturing

Many industrial manufacturing processes are mostly (if not completely) automated and overseen by operational technology (OT), such as supervisory control and data acquisition (SCADA) systems. Software analyzes logs from automated machinery and control systems to monitor equipment health, track production costs, schedule preventive maintenance, and perform quality assurance (QA) on components and products. However, transferring that data to the cloud or a centralized data center increases latency and creates security risks.

Manufacturers can use edge computing to analyze OT data in real time, gaining faster insights and catching potential issues before they affect product quality or delivery schedules. Edge computing also allows industrial automation and monitoring processes to continue uninterrupted even if the site loses Internet access due to an ISP outage, natural disaster, or other adverse event in the region. Edge resilience can be further improved by deploying an out-of-band (OOB) management solution like Nodegrid that enables control plane/data plane isolation (also known as isolated management infrastructure), giving remote teams a lifeline to access and recover OT systems.
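The article doesn’t prescribe a specific mechanism for riding out an outage, but one common pattern is store-and-forward: the edge device keeps processing locally and queues results for upstream systems until the WAN link returns. The Python sketch below is a minimal illustration under that assumption; the `StoreAndForward` class and the `send` callback are hypothetical stand-ins for whatever transport a site actually uses.

```python
from collections import deque
from typing import Callable, Deque, Dict


class StoreAndForward:
    """Queue readings for upstream delivery while the WAN link is down.

    Hypothetical sketch: `send` stands in for the site's real uplink
    and returns True only when delivery succeeds.
    """

    def __init__(self, send: Callable[[Dict], bool], max_items: int = 10_000):
        self.send = send
        # Bounded buffer: once full, appending drops the oldest reading.
        self.buffer: Deque[Dict] = deque(maxlen=max_items)

    def submit(self, reading: Dict) -> None:
        # Local analysis and control would already have run on this reading;
        # only the upstream copy waits for connectivity.
        self.buffer.append(reading)
        self.flush()

    def flush(self) -> int:
        """Deliver buffered readings oldest-first; stop at the first failure."""
        sent = 0
        while self.buffer:
            if not self.send(self.buffer[0]):
                break  # uplink still down: keep the reading for the next attempt
            self.buffer.popleft()
            sent += 1
        return sent


# Usage: simulate an outage, then recovery.
link_up = False
delivered = []


def fake_send(reading: Dict) -> bool:
    if link_up:
        delivered.append(reading)
    return link_up


queue = StoreAndForward(fake_send, max_items=3)
for seq in range(5):
    queue.submit({"seq": seq})  # outage: nothing is delivered yet
link_up = True
queue.flush()
print([r["seq"] for r in delivered])  # [2, 3, 4]: the oldest two were dropped
```

The bounded buffer is a deliberate trade-off: it caps memory use on a small edge device, at the cost of discarding the oldest readings if an outage outlasts its capacity.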

Retail operations

In the age of one-click online shopping, the retail industry has been innovating with technology to enhance the in-store experience, improve employee productivity, and keep operating costs down. Retailers have a brief window of time to meet a customer’s needs before they look elsewhere, and edge computing’s ability to leverage data in real time is helping address that challenge. For example, some stores place QR codes on shelves that customers can scan if a product is out of stock, alerting a nearby representative to provide immediate assistance.

Another retail application of edge computing is enhanced inventory management. An edge computing solution can make ordering recommendations based on continuous analysis of purchasing patterns over time combined with real-time updates as products are purchased or returned. Retail companies, like financial institutions, can also use edge AI/ML solutions to analyze surveillance data and aid in loss prevention.
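To make the idea of combining long-term patterns with real-time updates more concrete, here is a minimal Python sketch. It assumes a simple reorder-point model: an exponentially weighted moving average (EWMA) of daily demand stands in for purchasing patterns, while sales and returns adjust stock in real time. The `ShelfItem` class, its parameters, and the formula are illustrative assumptions, not how any particular retail product works.

```python
from dataclasses import dataclass


@dataclass
class ShelfItem:
    """Hypothetical per-SKU state kept on an in-store edge device."""
    on_hand: int
    avg_daily_demand: float   # long-term purchasing pattern (EWMA of daily sales)
    lead_time_days: float     # assumed supplier lead time
    safety_stock: int         # assumed buffer against demand spikes
    alpha: float = 0.2        # EWMA smoothing factor (assumption)
    sold_today: int = 0       # net units sold since the last close_day()

    def record_sale(self, qty: int = 1) -> None:
        self.on_hand -= qty
        self.sold_today += qty

    def record_return(self, qty: int = 1) -> None:
        self.on_hand += qty
        self.sold_today -= qty

    def close_day(self) -> None:
        # Blend today's net sales into the long-term demand estimate.
        self.avg_daily_demand = (
            self.alpha * self.sold_today + (1 - self.alpha) * self.avg_daily_demand
        )
        self.sold_today = 0

    def reorder_point(self) -> float:
        # Expected demand during the supplier lead time, plus safety stock.
        return self.avg_daily_demand * self.lead_time_days + self.safety_stock

    def recommend_order(self, cover_days: float = 7) -> int:
        """Suggest an order quantity once stock reaches the reorder point, else 0."""
        if self.on_hand > self.reorder_point():
            return 0
        target = self.avg_daily_demand * (self.lead_time_days + cover_days) + self.safety_stock
        return max(0, round(target - self.on_hand))


item = ShelfItem(on_hand=30, avg_daily_demand=10.0, lead_time_days=2, safety_stock=5)
item.record_sale(6)    # real-time update from the point of sale
item.record_return(1)  # a returned unit goes back on the shelf
print(item.on_hand, item.recommend_order())  # 25 70
```

With an average demand of 10 units/day, a 2-day lead time, and 5 units of safety stock, the reorder point is 10 × 2 + 5 = 25 units, so the sketch starts recommending an order once on-hand stock drops to 25.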

Healthcare

The healthcare industry processes massive amounts of data generated by medical equipment like insulin pumps, pacemakers, and imaging devices. Patient health data can’t be transferred over the open Internet, so getting it to the cloud or data center for analysis requires funneling it through a central firewall via MPLS (for hospitals, clinics, and other physical sites), overlay networks, or SD-WAN (for wearable sensors and mobile EMS devices). This increases the number of network hops and creates a traffic bottleneck that prevents real-time patient monitoring and delays responses to potential health crises.

Edge computing for healthcare allows organizations to process medical data on the same local network, or even the same onboard chip, as the sensors and devices that generate most of the data. This significantly reduces latency and mitigates many of the security and compliance challenges involved in transmitting regulated health data offsite. For example, an edge-native application running on an implanted heart-rate monitor can operate without a network connection much of the time, providing the patient with real-time alerts so they can modify their behavior as needed to stay healthy. If the app detects any concerning activity, it can use multiple cellular and AT&T FirstNet connections to alert the cardiologist without exposing any private patient data.
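As an illustration of that local-first pattern, the Python sketch below checks each heart-rate reading on the device and produces only a coded alert when readings stay out of range for several consecutive samples. The class name, thresholds, and sample count are hypothetical placeholders, not clinical values.

```python
from collections import deque
from typing import Deque, Optional


class HeartRateMonitor:
    """Hypothetical on-device check. Thresholds are placeholders, not clinical values."""

    def __init__(self, low_bpm: int = 40, high_bpm: int = 150, sustain: int = 5):
        self.low_bpm = low_bpm
        self.high_bpm = high_bpm
        self.sustain = sustain
        # Only the last `sustain` readings are kept, and they never leave the device.
        self.recent: Deque[int] = deque(maxlen=sustain)

    def observe(self, bpm: int) -> Optional[str]:
        """Evaluate one reading locally; return an alert code for a sustained excursion."""
        self.recent.append(bpm)
        if len(self.recent) < self.sustain:
            return None  # not enough history yet
        if all(r > self.high_bpm for r in self.recent):
            return "SUSTAINED_HIGH"
        if all(r < self.low_bpm for r in self.recent):
            return "SUSTAINED_LOW"
        return None


monitor = HeartRateMonitor(sustain=3)
alerts = [monitor.observe(bpm) for bpm in [72, 160, 165, 170, 80]]
print(alerts)  # [None, None, None, 'SUSTAINED_HIGH', None]
```

Because only the alert code would be sent upstream, the raw readings can stay on the device. A real implementation would also need to rate-limit repeated alerts, since this sketch returns a code on every reading while the excursion continues.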

Oil, gas, & mining

Oil, gas, and mining operations use IoT sensors to monitor flow rates, detect leaks, and gather other critical information about equipment deployed at remote sites, drilling rigs, and offshore platforms around the world. Drilling rigs are often located in extremely remote areas that are difficult or even impossible for people to reach, so maintaining reliable communications with monitoring applications in the cloud or data center can be difficult. Additionally, when networks or systems fail, sending IT teams to fix the issue on-site can be time-consuming, expensive, and risky.

The energy and mining industries can use edge computing to analyze data in real time even in challenging deployment environments. For example, companies can deploy monitoring software on cellular-enabled edge computing devices to gain immediate insights into equipment status, well logs, borehole logs, and more. This software can help establish more effective maintenance schedules, uncover production inefficiencies, and identify potential safety issues or equipment failures before they cause larger problems. Edge solutions with OOB management also allow IT teams to fix many issues remotely, using alternative cellular interfaces to provide continuous access for troubleshooting and recovery.
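One simple way monitoring software can flag potential equipment problems is to compare each new sensor reading (for example, a flow rate) against the recent history for that sensor. The Python sketch below uses a rolling z-score, an assumed technique chosen for illustration; the window size and the threshold of 3 standard deviations are placeholder values, and real deployments may use more sophisticated models.

```python
import statistics
from collections import deque
from typing import Deque, Optional


class RollingAnomalyDetector:
    """Flag sensor readings that sit far from the recent mean (rolling z-score).

    The window size and threshold are illustrative assumptions.
    """

    def __init__(self, window: int = 60, threshold: float = 3.0):
        self.values: Deque[float] = deque(maxlen=window)
        self.threshold = threshold

    def update(self, x: float) -> Optional[float]:
        """Return the z-score of x against the previous window if it exceeds the threshold."""
        result = None
        if len(self.values) >= 2:
            mean = statistics.mean(self.values)
            std = statistics.stdev(self.values)  # sample standard deviation
            # A perfectly flat window has no spread to compare against, so it is skipped.
            if std > 0:
                z = (x - mean) / std
                if abs(z) > self.threshold:
                    result = z
        self.values.append(x)  # the new reading joins the history either way
        return result


flow = RollingAnomalyDetector(window=10)
readings = [100.0, 102.0, 100.0, 102.0, 100.0, 102.0, 150.0]  # hypothetical flow rates
flags = [flow.update(r) for r in readings]
print([f is not None for f in flags])  # [False, False, False, False, False, False, True]
```

Because it needs only the last `window` readings, a detector like this fits comfortably on a small edge device and keeps working when the uplink is down.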

AI & machine learning

Artificial intelligence (AI) and machine learning (ML) have broad applications across many industries and use cases, but they’re all powered by data. That data often originates at the network’s edges from IoT devices, equipment sensors, surveillance systems, and customer purchases. Securely transmitting, storing, and preparing edge data for AI/ML ingestion in the cloud or centralized data center is time-consuming, logistically challenging, and expensive. Decentralizing AI/ML’s computational resources and deploying them at the edge can significantly reduce these hurdles and unlock real-time capabilities.

For example, instead of running AI on a rack of GPUs (graphics processing units) in a central data center to analyze equipment monitoring data from every location, a manufacturing company could use small edge computing devices to provide AI-powered analysis at each individual site. This would reduce bandwidth costs and network latency, enabling near-instant insights and a faster return on the company’s investment in AI technology.

AIOps can also be improved by edge computing. AIOps solutions analyze monitoring data from IT devices, network infrastructure, and security solutions and provide automated incident management, root-cause analysis, and simple issue remediation. Deploying AIOps on edge computing devices enables real-time issue detection and response. It also ensures continuous operation even if an ISP outage or network failure cuts off access to the cloud or central data center, helping to reduce business disruptions at vital branches and other remote sites.

Getting started with edge computing

The edge computing market has focused primarily on single-use-case solutions designed to solve specific business problems, forcing businesses to deploy many individual applications across the network. This piecemeal approach to edge computing increases management complexity and risk while decreasing operational efficiency.

The recommended approach is to use a centralized edge management and orchestration (EMO) platform to monitor and control edge computing operations. The EMO should be vendor-agnostic and interoperate with all the edge computing devices and edge-native applications in use across the organization. The easiest way to ensure interoperability is to use vendor-neutral edge computing platforms to run edge-native apps and AI/ML workflows.

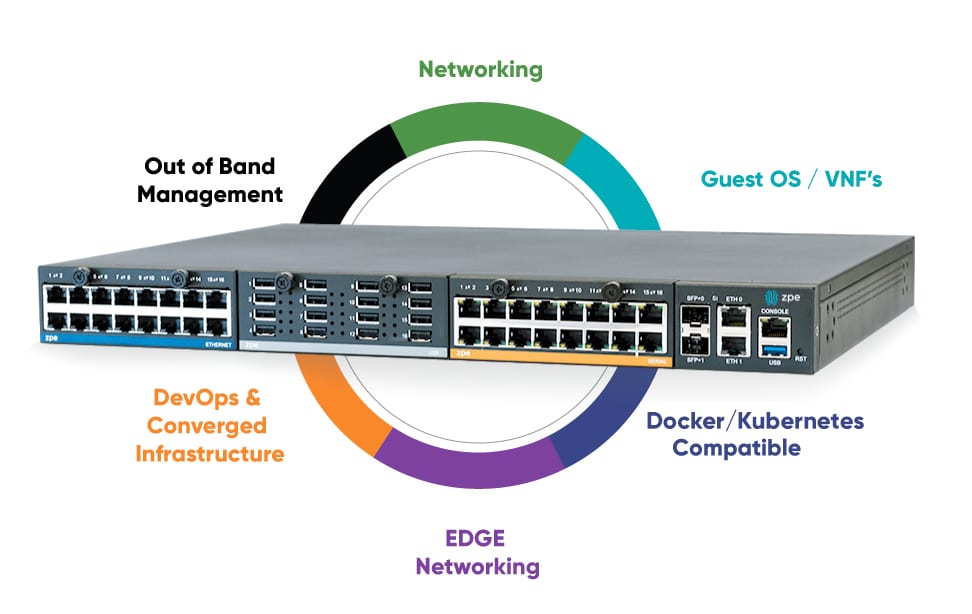

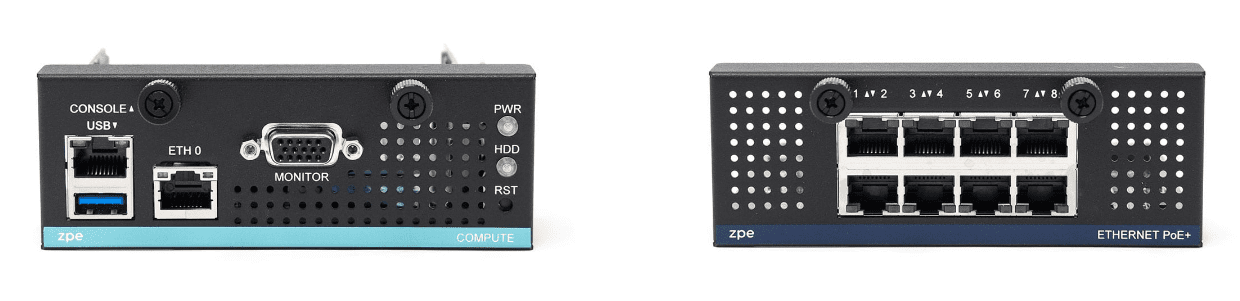

For example, the Nodegrid platform from ZPE Systems provides the perfect vendor-neutral foundation for edge operations. Nodegrid integrated branch services routers, such as the Gate SR with an onboard NVIDIA Jetson Nano, run the open, Linux-based Nodegrid OS, which can host Docker containers and edge-native applications for third-party AI, ML, data analytics, and more. These devices use out-of-band management to provide 24/7 remote visibility, management, and troubleshooting access to edge deployments, even in challenging environments like offshore oil rigs. Nodegrid’s cloud-based or on-premises software provides a single pane of glass for orchestrating operations at all edge computing sites.

Streamline your edge computing deployment with Nodegrid

The vendor-neutral Nodegrid platform can simplify all applications of edge computing with easy interoperability, reduced hardware overhead, and centralized edge management and orchestration. Schedule a Nodegrid demo to learn more.

