7 Security Benefits of Implementing FIPS 140-3 for Out-of-Band Management

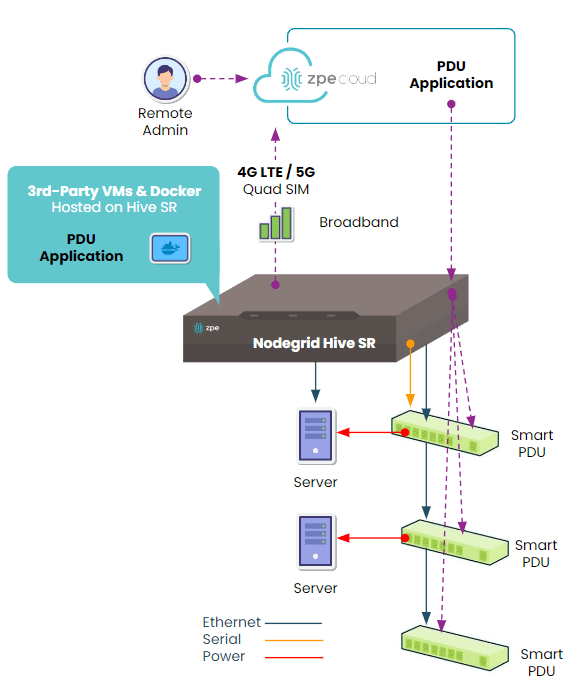

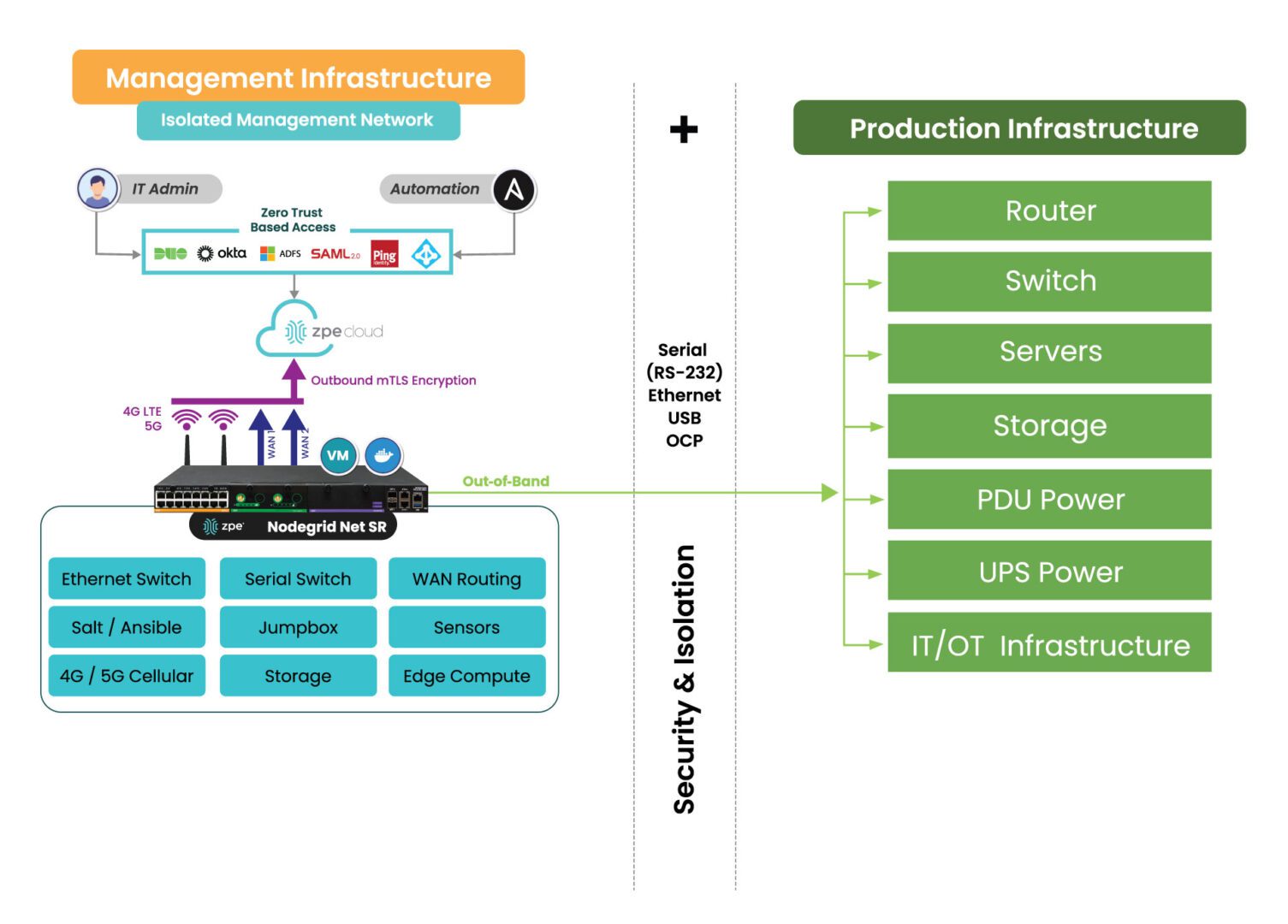

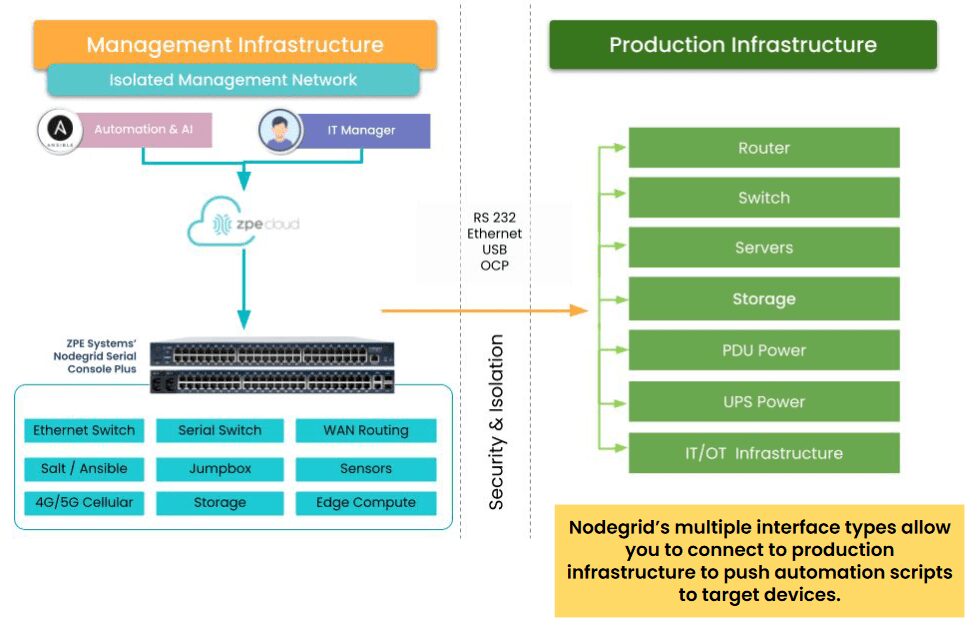

Out-of-band (OOB) management is essential for maintaining control over critical network infrastructure, especially during outages or cyberattacks. This separate management network enables administrators to remotely access, troubleshoot, and recover production equipment. However, managing network devices outside the main data path also brings unique security challenges, as these channels often carry sensitive control data and system access credentials.

Implementing FIPS 140-3-certified encryption within OOB systems helps organizations secure this vital access path so that management data can’t be intercepted or manipulated by unauthorized actors. Here’s how FIPS 140-3 certification can enhance the security, reliability, and compliance of your out-of-band management.

What is FIPS 140-3 Certification?

FIPS (Federal Information Processing Standard) 140-3 is a U.S. government security standard published by the National Institute of Standards and Technology (NIST). It specifies rigorous requirements for cryptographic modules used to protect sensitive data. Modules are tested by accredited laboratories and validated through the Cryptographic Module Validation Program (CMVP). The standard covers areas ranging from approved encryption algorithms to operator authentication and physical security. For out-of-band management, FIPS 140-3 certification means that cryptographic components in hardware, software, and firmware have been independently verified against these requirements.

By implementing FIPS-certified solutions, organizations can ensure their OOB management is resilient against modern cyber threats, protecting both the control channels and the sensitive data they carry. Here are seven security benefits of implementing FIPS 140-3 for out-of-band management.

7 Security Benefits of Implementing FIPS 140-3 for Out-of-Band Management

1. Secure Encryption of Management Traffic

OOB management often involves remote access to routers, switches, servers, and other critical devices. FIPS 140-3 certification means that the cryptographic modules used in these systems have been independently tested, including the algorithms that secure data in transit. Encrypting management traffic is crucial to prevent interception or manipulation by unauthorized users, particularly for tasks such as command execution, configuration updates, and device monitoring.

With FIPS-certified encryption, companies can protect OOB traffic between management devices and network components, so that only authorized administrators have access to sensitive system commands and device settings.
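As a rough illustration of what encrypting management traffic involves on the client side, the Python sketch below builds a TLS context that requires TLS 1.2 or later, verifies the device's certificate, and limits TLS 1.2 to AES-GCM suites with ECDHE key exchange. The cipher list and the function name `build_management_tls_context` are assumptions made for this example, not Nodegrid settings. Note that selecting approved algorithms is not the same as running a FIPS 140-3-validated module; that depends on the cryptographic library your platform provides.

```python
import ssl

# Assumption: an illustrative subset of OpenSSL cipher names that use
# AES-GCM, ECDHE key exchange, and SHA-2. Check your own policy before reuse.
APPROVED_TLS12_CIPHERS = ":".join([
    "ECDHE-ECDSA-AES256-GCM-SHA384",
    "ECDHE-RSA-AES256-GCM-SHA384",
    "ECDHE-ECDSA-AES128-GCM-SHA256",
    "ECDHE-RSA-AES128-GCM-SHA256",
])


def build_management_tls_context() -> ssl.SSLContext:
    """Return a client-side TLS context for talking to a management endpoint."""
    # create_default_context() enables certificate and hostname verification.
    ctx = ssl.create_default_context(ssl.Purpose.SERVER_AUTH)
    # Refuse anything older than TLS 1.2.
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    # set_ciphers() only governs TLS 1.2 and earlier; TLS 1.3 suites are
    # controlled separately by the underlying OpenSSL build.
    ctx.set_ciphers(APPROVED_TLS12_CIPHERS)
    return ctx
```

A context like this could be passed to a standard-library client, for example `http.client.HTTPSConnection(host, context=ctx)`, when calling a device's management API.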

2. Enhanced Authentication and Access Control

OOB management solutions typically support different user roles, each with its own access privileges. Solutions built on FIPS 140-3-certified modules, like ZPE Systems’ Nodegrid, feature multi-factor authentication (MFA) to control who can initiate OOB management sessions. Certified solutions also include secure key management practices that help prevent unauthorized access, so that only verified users can control and modify network devices.

These protections mean FIPS-certified solutions help mitigate the risk of unauthorized users accessing high-value assets. This is especially important during ransomware recovery efforts, when teams need to launch a secure, Isolated Recovery Environment to combat an active attack in a compromised environment.
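To make the combination of roles and MFA concrete, here is a minimal Python sketch of an authorization check. The role names, action names, and the `Session` type are hypothetical and invented for this illustration; they do not describe how Nodegrid or any certified module implements access control.

```python
from dataclasses import dataclass

# Hypothetical roles and the actions each one may perform.
ROLE_PERMISSIONS = {
    "viewer": {"view_status"},
    "operator": {"view_status", "open_console"},
    "admin": {"view_status", "open_console", "change_config"},
}


@dataclass(frozen=True)
class Session:
    user: str
    role: str
    mfa_verified: bool  # True only after a second factor has been checked


def is_allowed(session: Session, action: str) -> bool:
    """Allow an action only for MFA-verified sessions whose role grants it."""
    if not session.mfa_verified:
        return False
    # Unknown roles get an empty permission set, so they are denied by default.
    return action in ROLE_PERMISSIONS.get(session.role, set())
```

The check denies by default: a session without a verified second factor, or with a role that is not listed, cannot perform any action.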

3. Protection Against Tampering and Physical Attacks

Many organizations deploy IT infrastructure in locations where physical device security is lacking. For example, remote colocations, unmonitored drilling sites, or rural health clinics can easily expose network infrastructure to device tampering. At Security Level 2 and above, FIPS 140-3 requires tamper-evident protections for hardware cryptographic modules, with stronger tamper-resistance and response mechanisms at higher levels. OOB solutions like ZPE Systems’ Nodegrid provide robust protection against tampering, with features including:

- UEFI secure boot: Prevents the execution of unauthorized software during the boot process.

- TPM 2.0: Provides hardware-backed key generation and storage, and supports boot integrity checks that help confirm only authorized software is running.

- Secure erase: Allows for deletion of all data from storage, so no data can be recovered from devices that have been tampered with.

These features make it much harder for someone with physical access to OOB equipment to intercept or modify management traffic. In remote and edge locations, FIPS-certified cryptographic modules provide robust protection against physical attacks, making it harder for adversaries to compromise OOB management pathways.

4. Compliant and Secure Logging of Access Activities

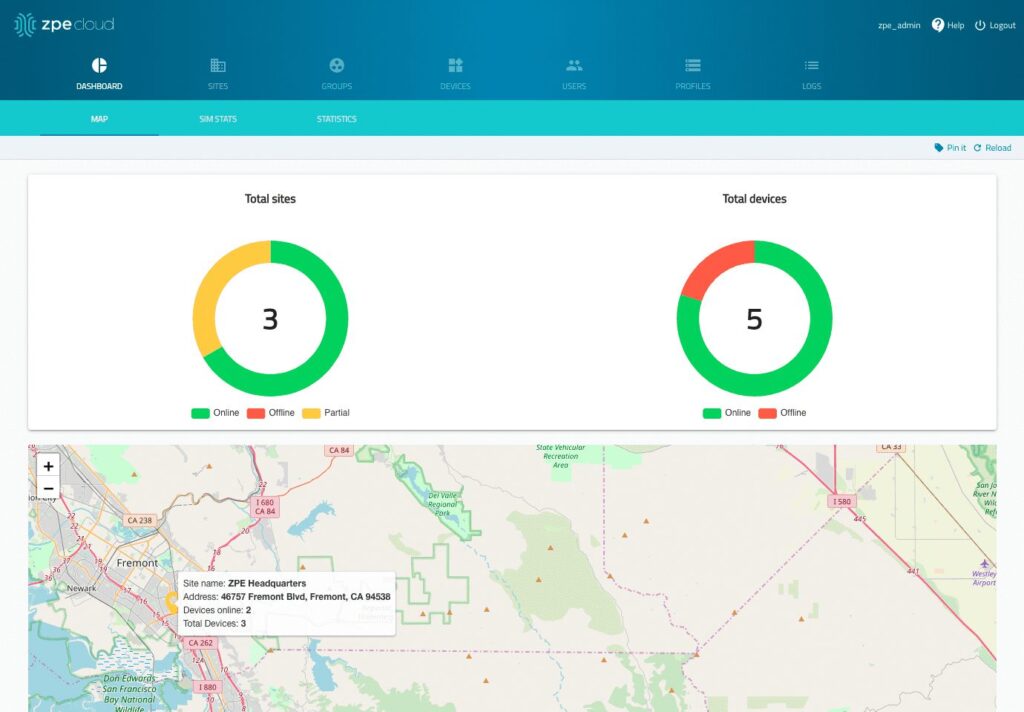

Because OOB management systems provide access to critical equipment, organizations need transparency into OOB users and their management activities. This means logging and auditing are essential to maintaining security and compliance. FIPS 140-3-certified modules support secure logging of all management activities, creating a clear audit trail of access attempts and security events. These logs are stored securely to prevent unauthorized users from altering or erasing them, providing valuable insights for security monitoring and incident response.

Secure logging is not only critical for monitoring access but also necessary for meeting regulatory compliance. FIPS 140-3 certification helps OOB management systems satisfy audit requirements, making compliance easier and protecting organizations from potential regulatory penalties.
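One common way to make an audit trail tamper-evident is to chain each entry to the previous one with a keyed hash. The Python sketch below uses HMAC-SHA-256 from the standard library. The function names and log layout are assumptions for illustration only, and this is not a description of how any specific product stores its logs.

```python
import hashlib
import hmac
import json

GENESIS = "0" * 64  # placeholder "previous MAC" for the first entry


def _entry_mac(key: bytes, prev_mac: str, event: dict) -> str:
    """MAC over the previous entry's MAC plus this event's canonical JSON."""
    payload = prev_mac + json.dumps(event, sort_keys=True)
    return hmac.new(key, payload.encode("utf-8"), hashlib.sha256).hexdigest()


def append_event(log: list, key: bytes, event: dict) -> None:
    """Append an event, linking it to the entry before it."""
    prev_mac = log[-1]["mac"] if log else GENESIS
    log.append({"event": event, "mac": _entry_mac(key, prev_mac, event)})


def verify_log(log: list, key: bytes) -> bool:
    """Recompute the chain; any edited or removed earlier entry breaks it."""
    prev_mac = GENESIS
    for entry in log:
        expected = _entry_mac(key, prev_mac, entry["event"])
        if not hmac.compare_digest(expected, entry["mac"]):
            return False
        prev_mac = entry["mac"]
    return True
```

Because each MAC depends on the one before it, changing or deleting an earlier entry invalidates every entry that follows. This sketch does not detect removal of the most recent entries; covering that case would need something extra, such as periodically recording the latest MAC in a separate system.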

5. Meeting Regulatory Requirements in Sensitive Environments

Many industries handle sensitive data, especially government, healthcare, and finance. For organizations in these industries, it’s often mandatory to use FIPS-certified cryptographic solutions. FIPS 140-3 certification helps OOB management systems align with federal security regulations and supports compliance efforts for frameworks like HIPAA and PCI DSS. By deploying FIPS-certified encryption, organizations can streamline audits, reduce the risk of regulatory penalties, and reinforce trust with customers.

6. Consistent Security Across Main and OOB Networks

It’s easy for organizations to focus on securing the main network while overlooking the protections on their out-of-band network. FIPS-certified solutions help establish consistent security standards across both paths. This is especially important in protecting against lateral movement, where attackers infiltrate one network and then try to jump to the other. When security protocols match across the main and OOB networks, an attacker who gains access to one segment has a much harder time moving into sensitive management channels.

Using FIPS 140-3-certified encryption across both networks also strengthens the organization’s ability to monitor, manage, and control devices, even when the primary network is under threat.

7. Securing Remote and Edge Devices

For organizations with remote infrastructure, such as telecom and retail, OOB management is critical for managing network devices in distant locations. However, these environments often lack the physical security of centralized data centers, making them vulnerable to tampering. FIPS-certified solutions ensure that all communication with remote OOB devices is encrypted, which protects management data from unauthorized access.

FIPS 140-3 certification also supports the resilience of IoT and edge devices, which often require OOB management for secure monitoring, patching, and configuration.

Implement the Most Secure Out-of-Band Management with ZPE Systems

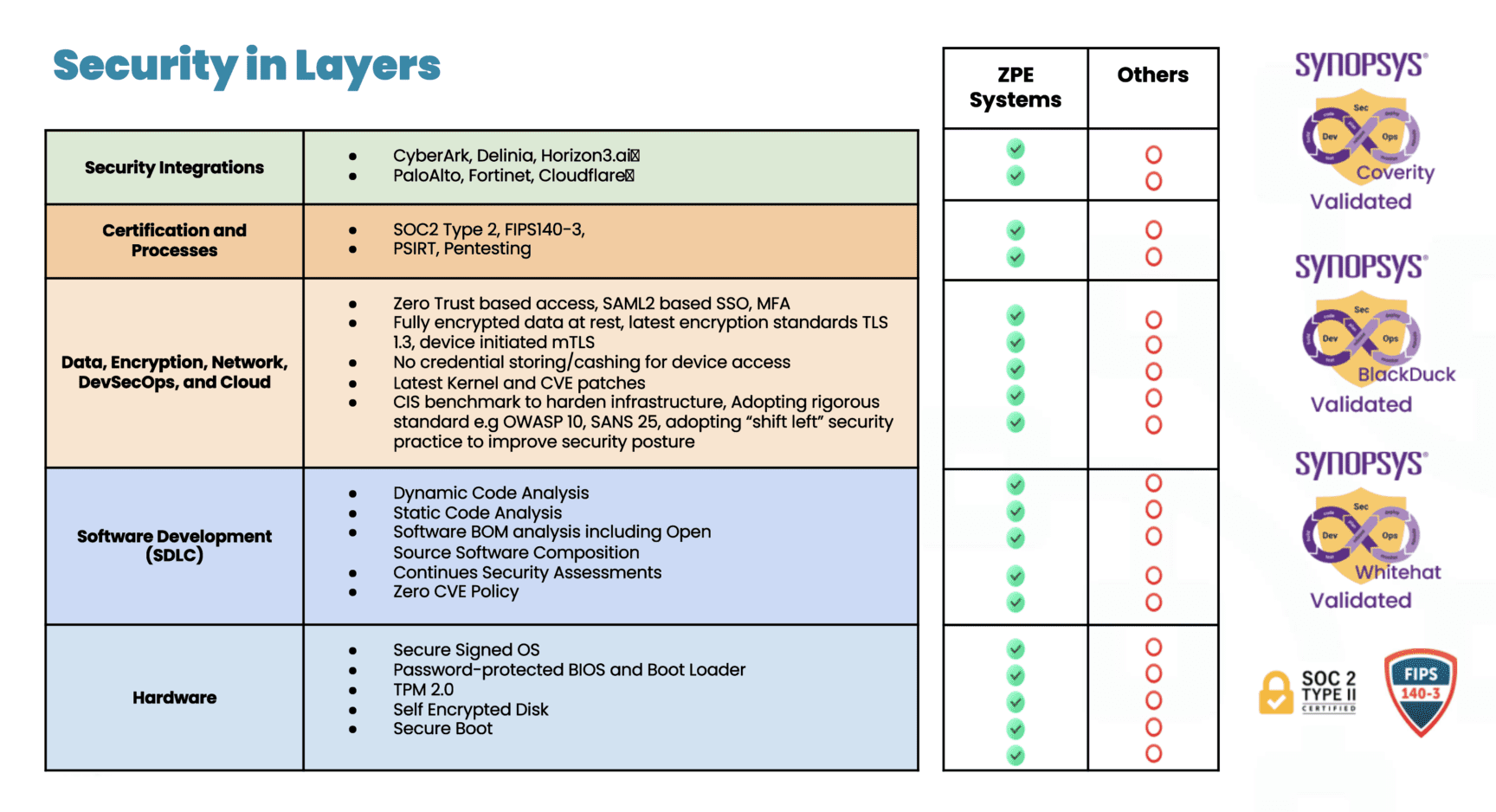

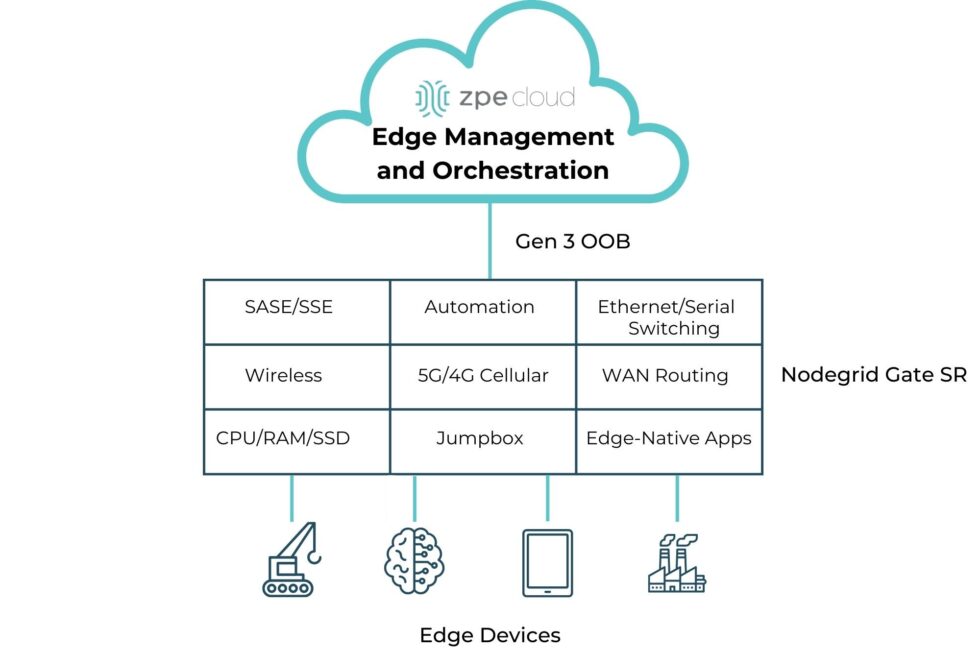

ZPE Systems’ Nodegrid is the industry’s most secure out-of-band management solution. Not only do we carry FIPS 140-3, SOC 2 Type 2, and ISO 27001 certifications, but we also feature a Synopsys-validated codebase and dozens of security features across the hardware, software, and cloud layers. These are all part of a multi-layered, secure-by-design approach that ensures the strongest physical and cyber safeguards.

Download our PDF to explore more of our security assurance.

See FIPS-Certified Out-of-Band in Action

Our engineers are ready to walk you through our industry-leading out-of-band management. Use the button below to set up a 15-minute demo and explore FIPS 140-3 security features first-hand.